AR Prototype for NASA

A few years ago, Steve Swanson, a retired NASA astronaut and Distinguished Educator in Residence at Boise State University (BSU), dropped into our development lab and asked if we wanted a chance to develop a Heads-Up Display (HUD) prototype for NASA astronaut helmets. NASA would use this application prototype for Extra-Vehicular Activities (EVAs) on the International Space Station, and eventually on the Orion spacecraft, the Lunar Gateway, and Mars missions.

Problem Description

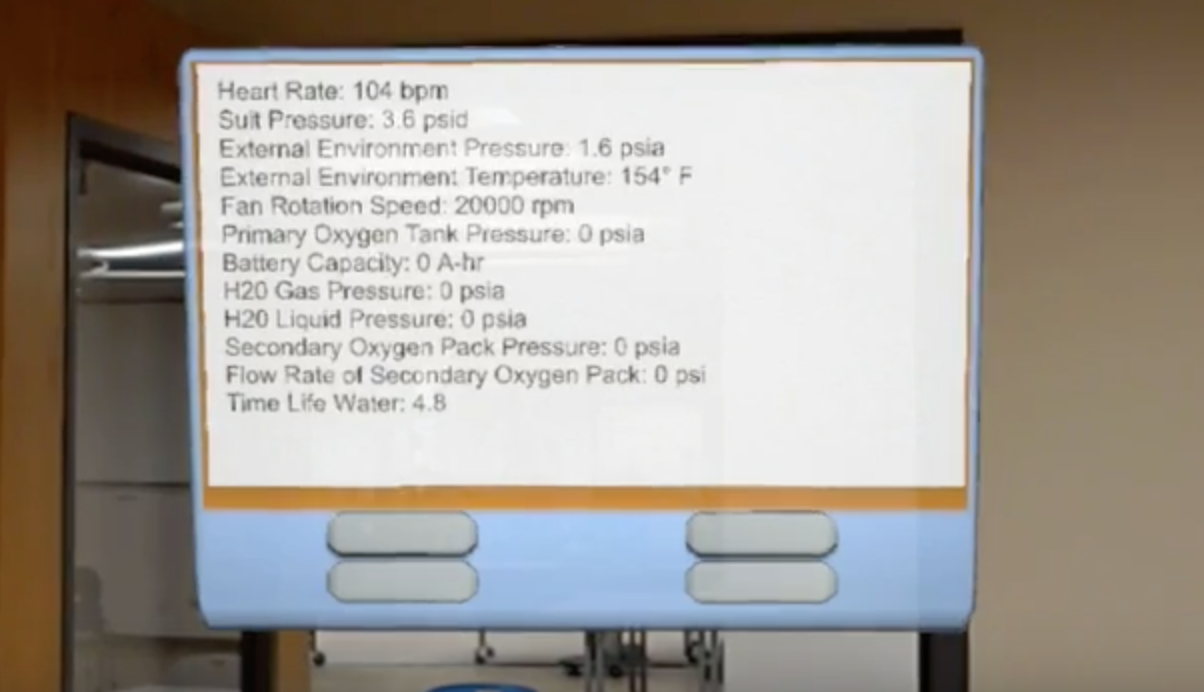

Spacewalks on the International Space Station (ISS) are dangerous and expensive, but necessary for upkeep and research. Mechanical failures, time constraints, losing one's bearings, and life support problems can happen suddenly. Currently, astronauts use laminated instructions attached to their wrist and radio communication with Mission Control to supplement their training while navigating to a work site, building, doing repairs, and performing experiments. Because of this, valuable time can be wasted when things go wrong or when visual communication isn’t possible. The EMU (Extravehicular Mobility Unit), or spacesuit, that astronauts wear was introduced in 1981 and enhanced for safety in 1998. Currently, a total of 11 EMUs are in working order.

ARSIS 1.0, built for Microsoft HoloLens using Unity and C#, is our response to the NASA SUITS challenge. Our goals in creating ARSIS 1.0 were:

1. Improve safety

2. Increase crew member autonomy

3. Expand effective communication between Mission Control and the crew members

The Team

An all-student team from Boise State University, mentored by a retired NASA astronaut, a passionate engineer, and an HCI (Human-Computer Interaction) doctorate.

My Role

Lead UX/UI Design Team

Collaborate with and teach 2 Junior Designers

Work with 6 Developers, 2 NASA Mentors, 1 HCI Mentor, 2 Project Managers (based at Johnson Space Center (JSC) in Houston, TX), and 3 Project Interns (JSC)

Take project from brainstorm to handoff

ARSIS 1.0 on a Timeline

Meet with Mentors

Assess challenges

Create plan

Create Interface

Iterate

Create Testing Plan

Testing at BSU

Testing at JSC

Wrap project, and hand off to NASA SUITS at JSC

The Chance of a Lifetime

Regular video meetings with the folks at JSC and other teams around the country began, and we brainstormed the starting point of the project. Because nothing like this had been prototyped before, our team worked with our mentor, Steve, the NASA SUITS Educational Team at JSC, and the UX/UI and development teams on this unique and complex design problem. Designing and implementing something with no existing precedent to build on was a real challenge.

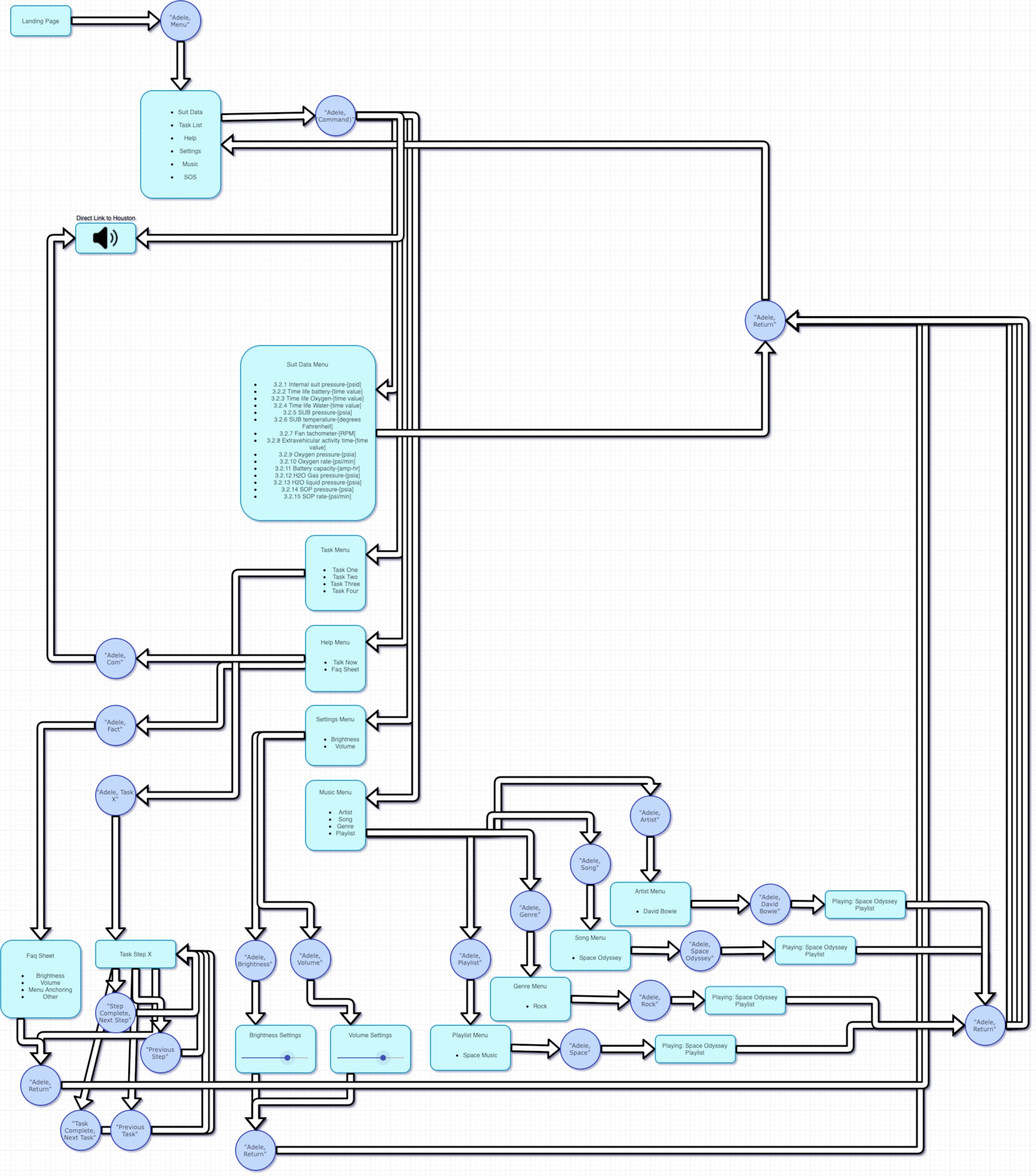

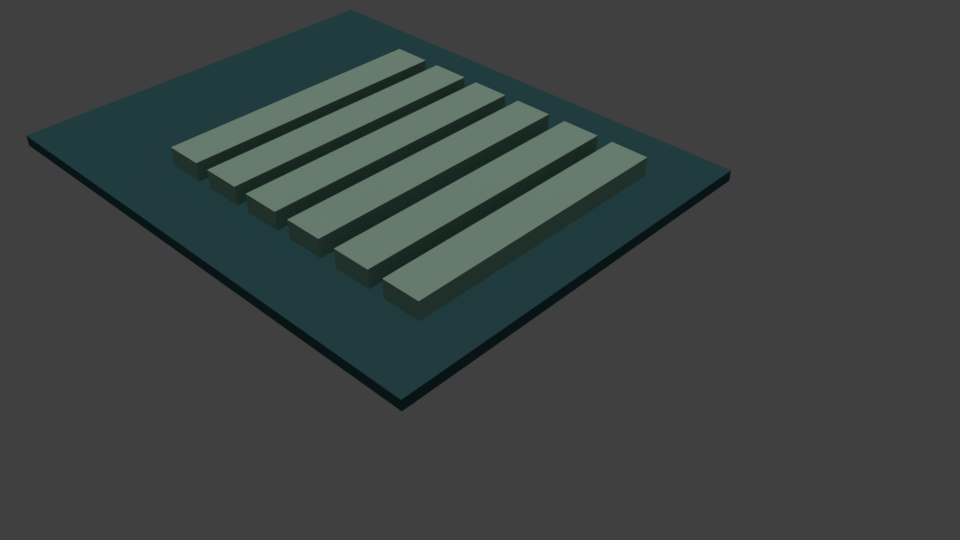

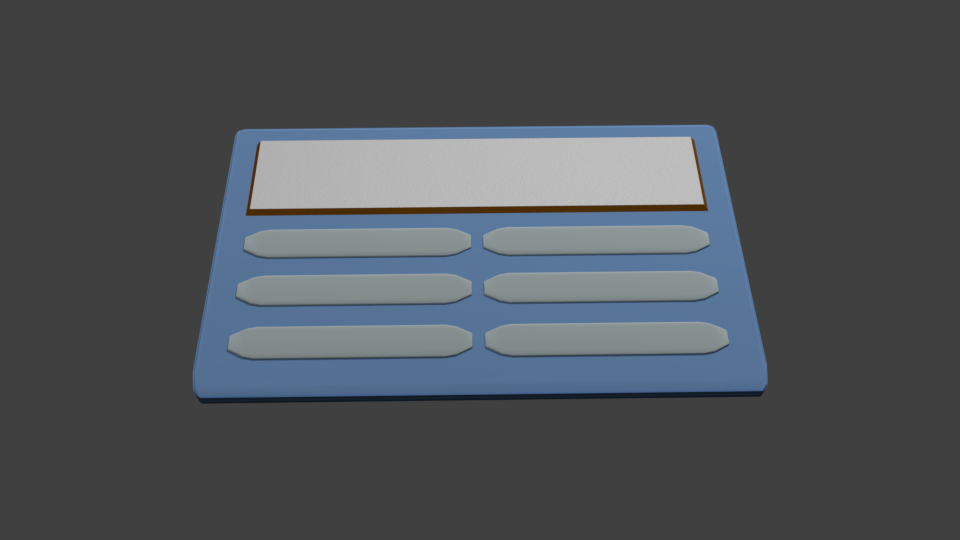

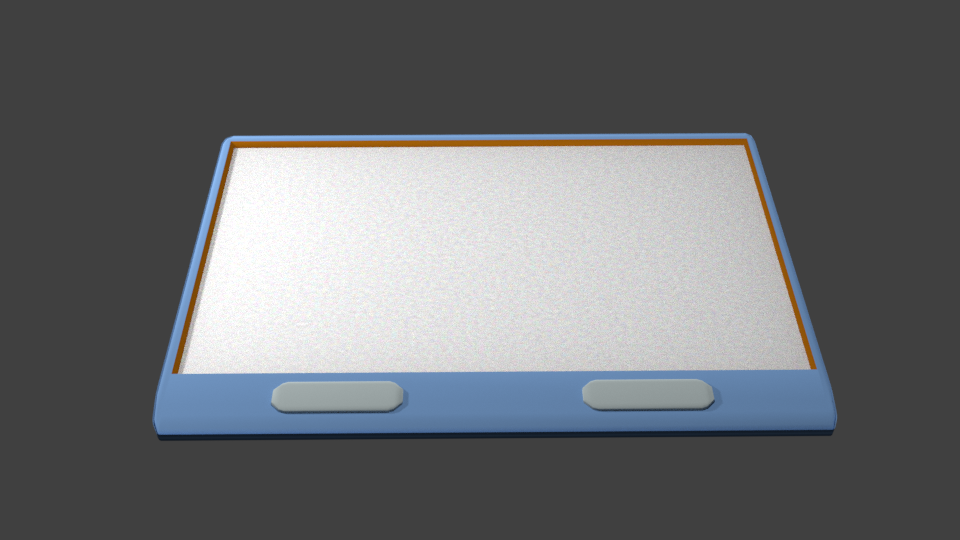

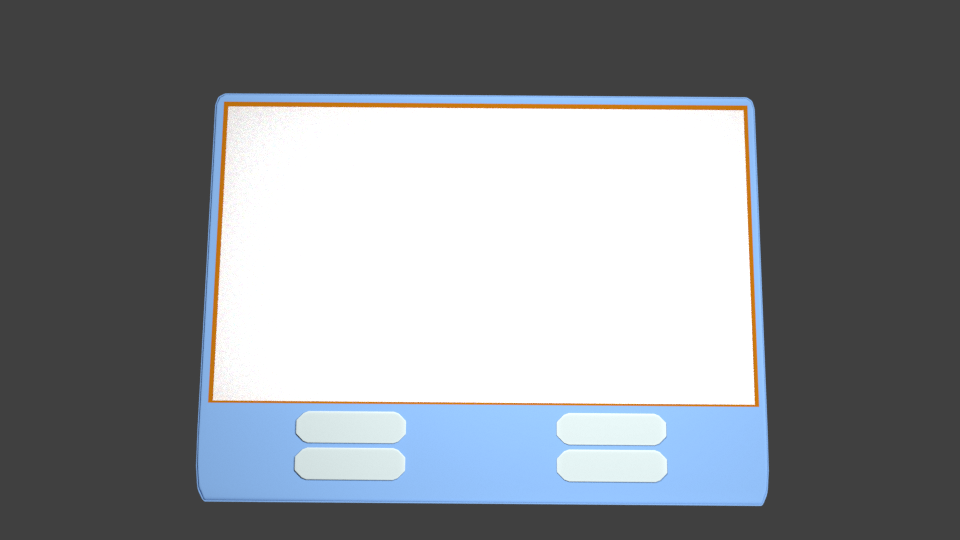

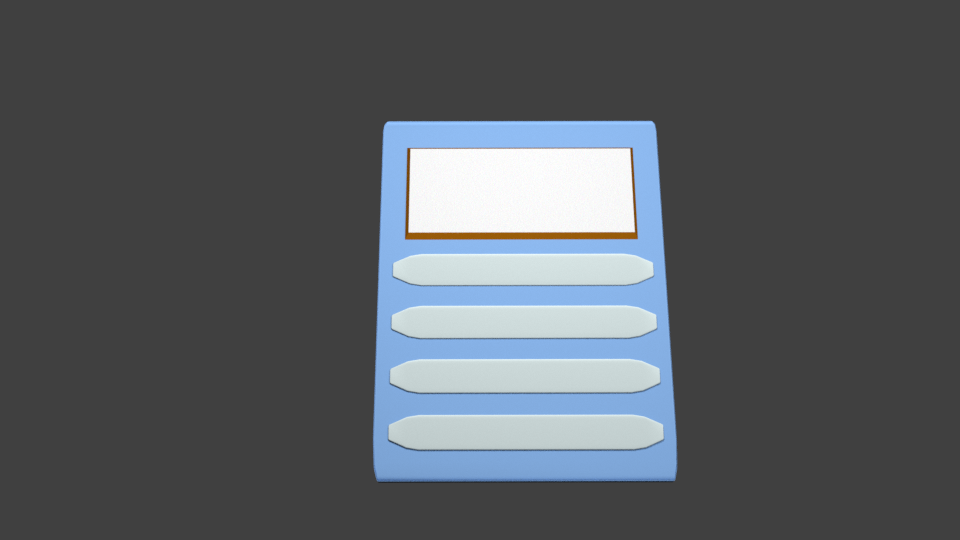

Initial 3D 'Sketches'

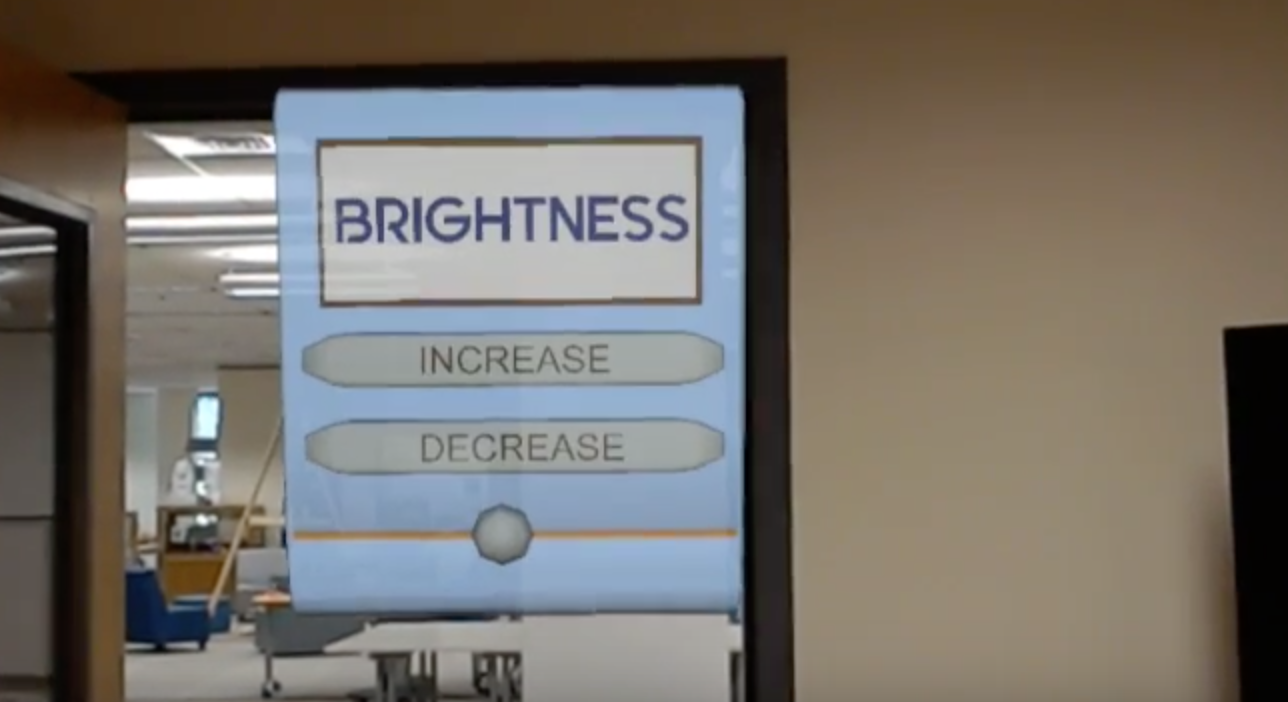

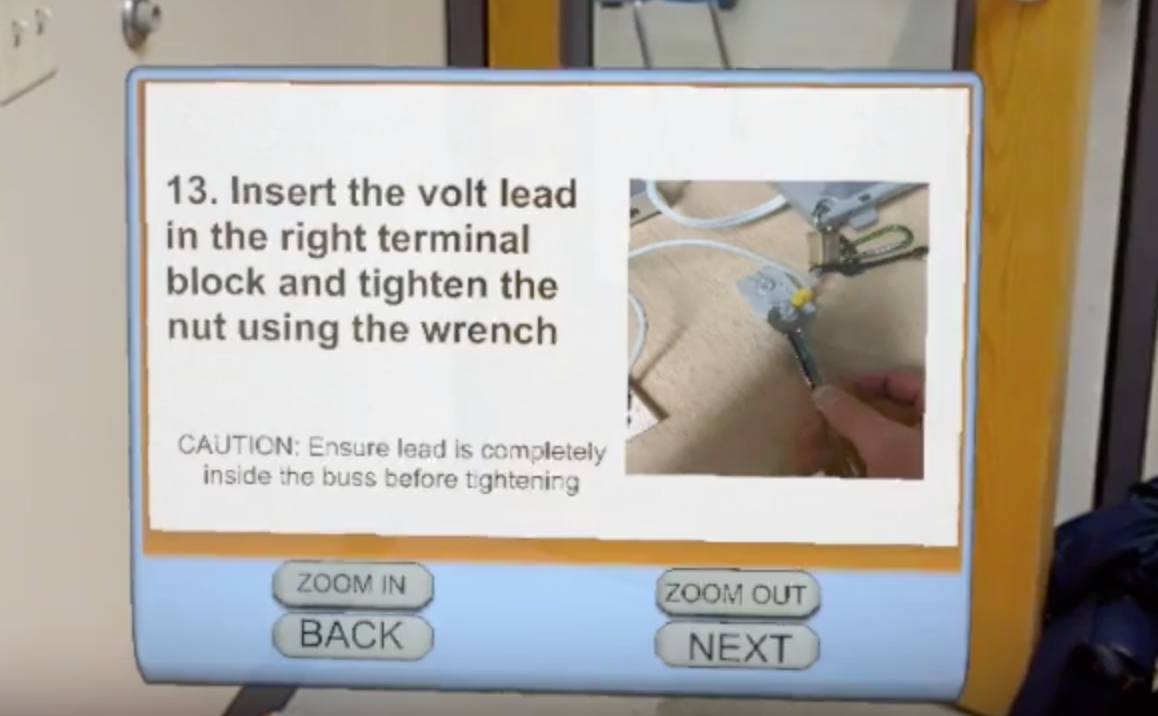

After a lot of consideration and research, I put together 3D interface prototypes and a GUI. We decided to use a 3D-modeled interface and based it on the look and feel of mathematics calculators and large-buttoned tablets, giving users in our target demographic (NASA astronauts, whose ages ranged from 35 to 65 years old) a familiar reference for how to interact with the interface. Tools for prototyping included pencil and paper, Adobe Photoshop, and Blender. Once that was finished, I used Blender and Adobe Photoshop to create the models, UV unwrap them, and create textures for each component.

The Interface: Iteration 2

The Interface: Final Iteration

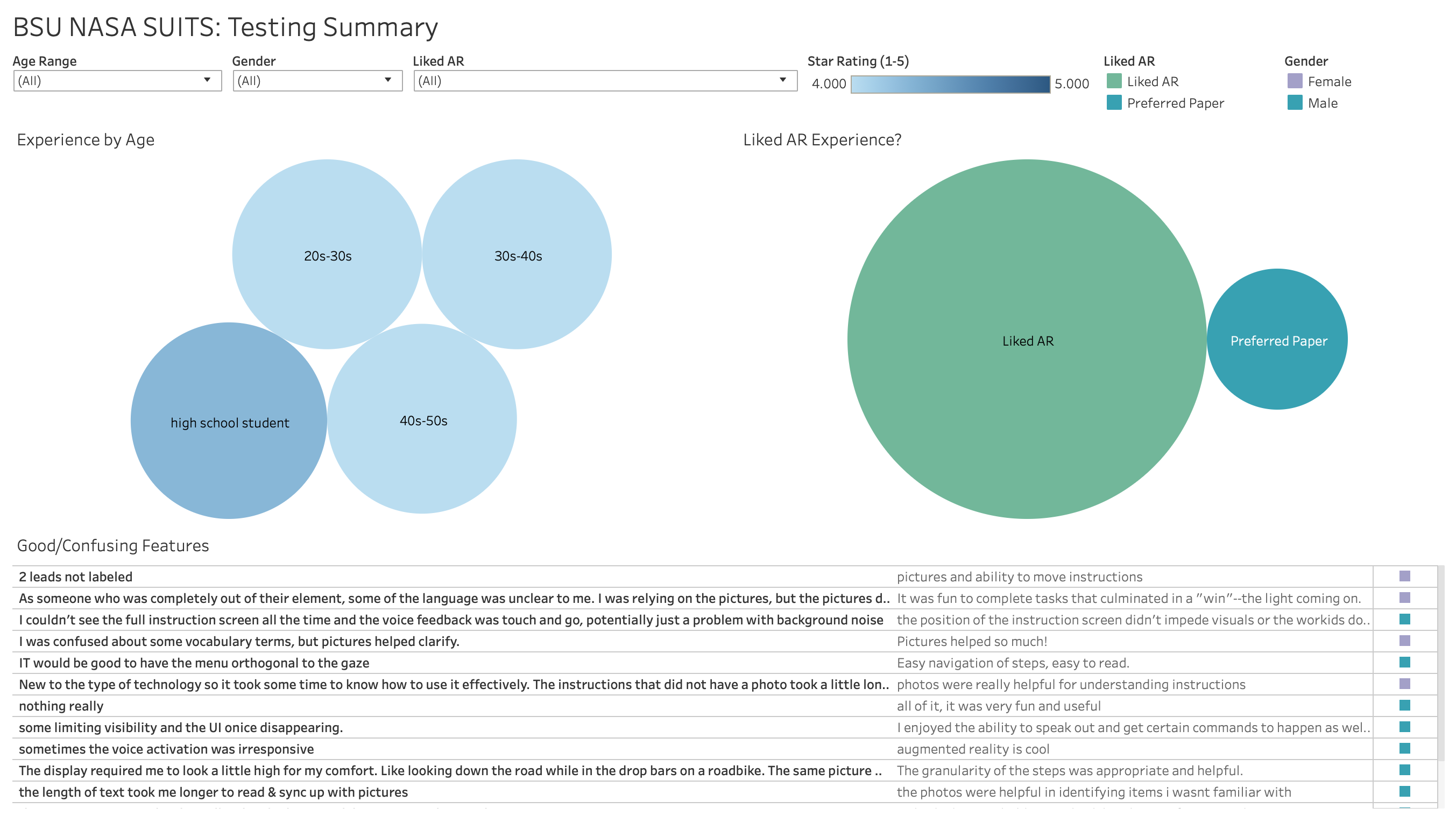

The Testing Process

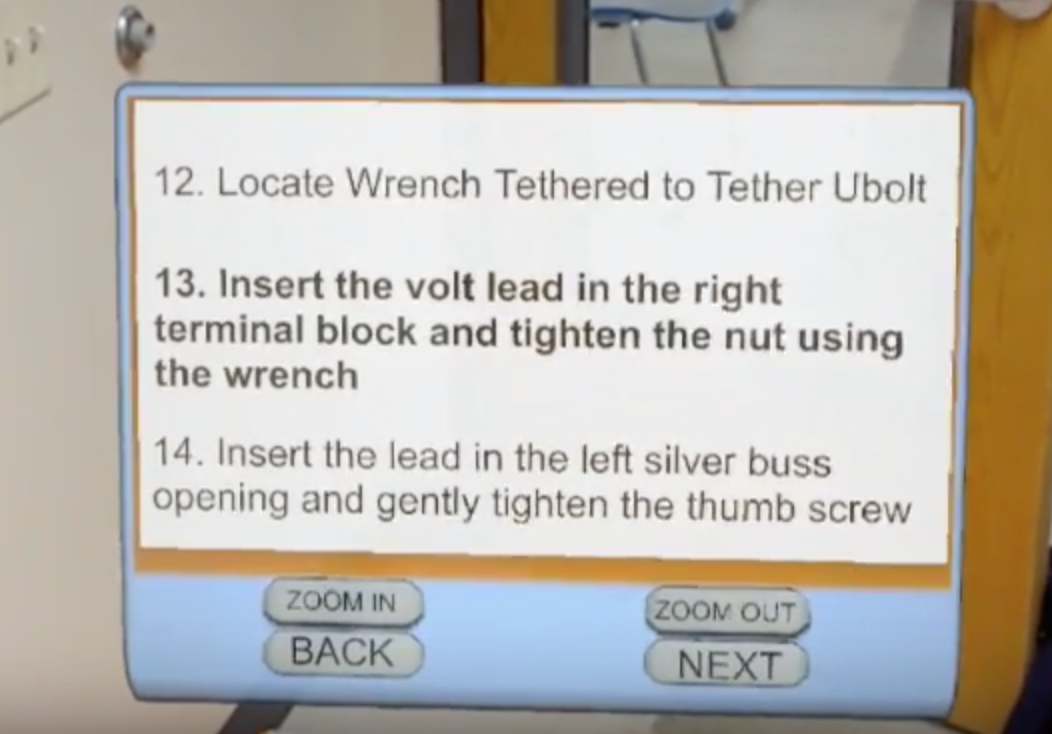

I worked with both teams and our mentors to integrate the interface with the code, and then worked with a software engineer and the astrophysics consultant to write a user test plan. The test plan included moderated usability testing, surveys, and A/B testing. Beta testing was conducted at Boise State University's annual Bronco Days recruiting event. Volunteer participants within our target demographics (as well as test subjects outside our scope) played through a testing simulation we created to test all aspects of the interface and software.

After that, a specially chosen group from the BSU NASA SUITS Team spent a week at Johnson Space Center in Houston doing further testing, development, and demonstrations under the direction of the NASA SUITS Educational leadership. This testing was conducted primarily at the Human-In-The-Loop testing facility at the HIVE (Human Integrated Vehicles & Environments) Wearables Lab, on the NASA JSC campus. The experience was invaluable: I got to interact with and see the work of NASA engineers, meet astronauts and cosmonauts, and learn from the amazing people who work at NASA JSC.

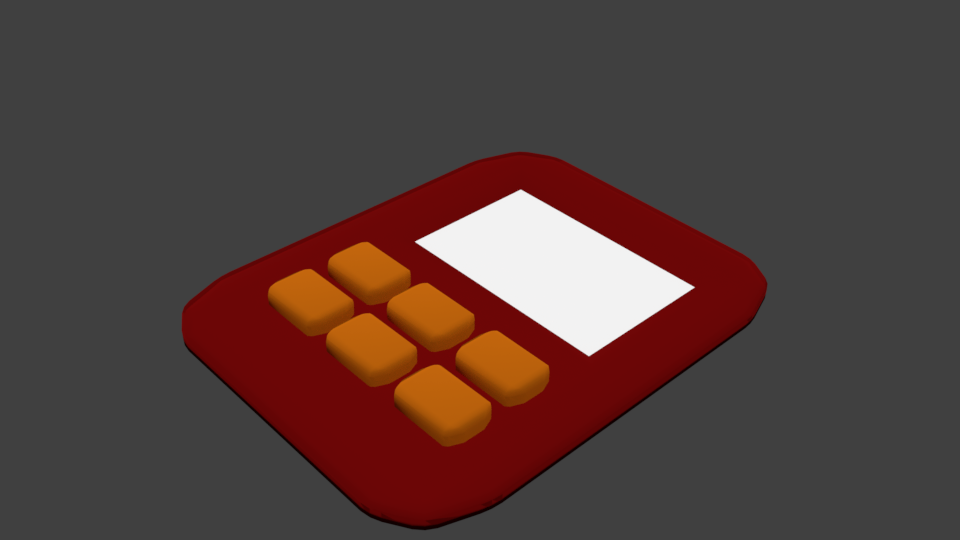

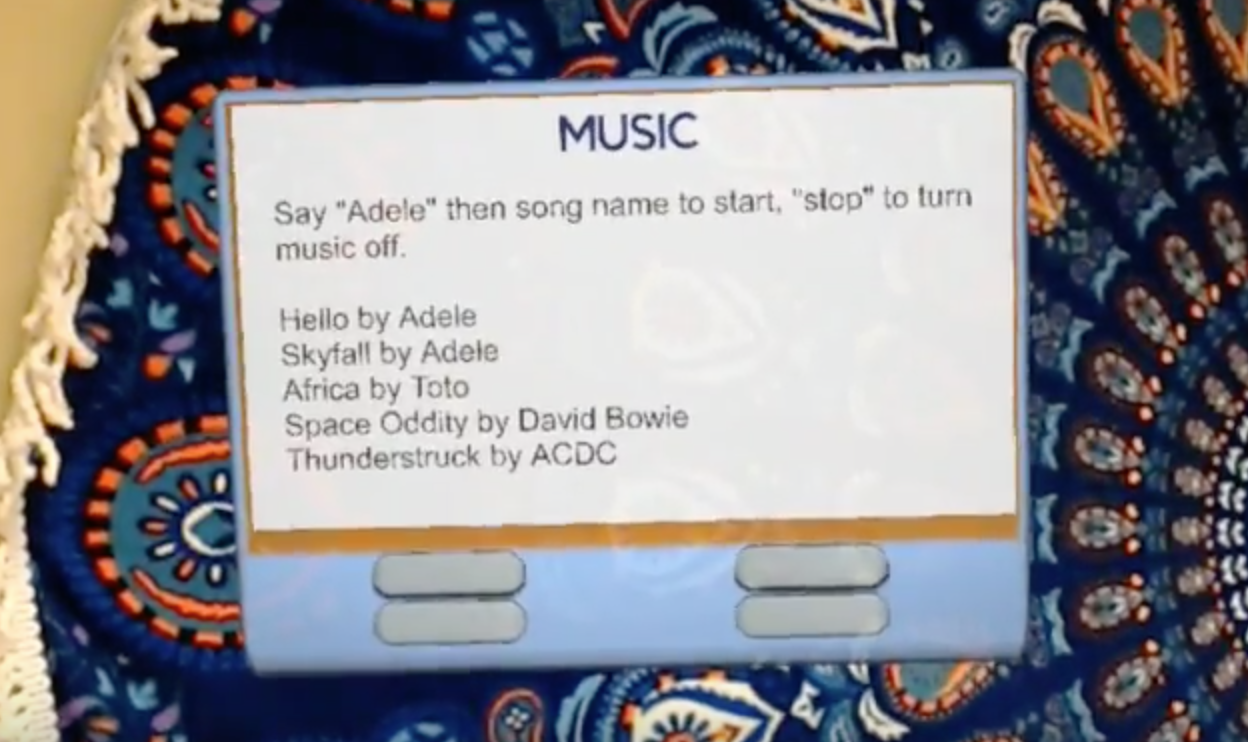

Solutions: ADELE, the Voice Interface

ARSIS uses a voice interface (like Siri or Cortana) we named ‘ADELE’ (Audio Device for Extraterrestrial Listening Environments), an homage to Adele Goldstine, one of the original ENIAC programmers. ADELE allows users to interact with ARSIS without having to use their hands, thus freeing them up for work. This is especially important for astronauts on EVA, because the bulky spacesuit gloves they wear don’t allow for the fine motor movements required to manually interact with the AR interface.

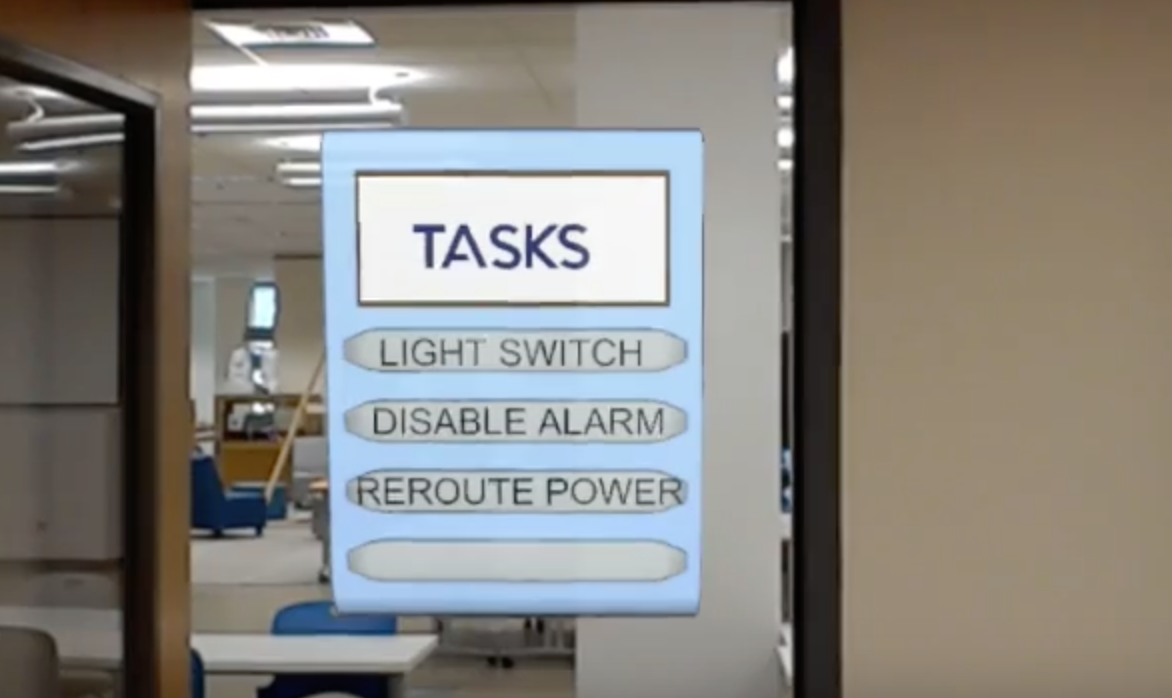

Solutions: Voice Commands bring up Selected Menu
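
To illustrate the idea of a spoken phrase bringing up a menu, here is a minimal, language-agnostic sketch written in Python. It is not the ARSIS code (which was built in Unity with C#); the wake word, the phrases, and the menu names are all hypothetical and chosen only for illustration. It assumes a speech recognizer has already turned the astronaut's speech into a text transcript.

```python
import string
from typing import Optional

# Hypothetical wake word and command table (phrase -> menu name).
# These are illustrative assumptions, not the actual ARSIS vocabulary.
WAKE_WORD = "adele"
MENUS = {
    "show procedures": "procedures",
    "show biometrics": "biometrics",
    "show map": "map",
}


def resolve_menu(transcript: str) -> Optional[str]:
    """Return the menu to open for a recognized utterance, or None."""
    # Normalize: lowercase and drop punctuation such as the comma in "Adele, ...".
    cleaned = transcript.lower().translate(
        str.maketrans("", "", string.punctuation)
    )
    words = cleaned.split()
    # Ignore anything that is not addressed to the assistant.
    if not words or words[0] != WAKE_WORD:
        return None
    # Look up the rest of the utterance; unknown phrases map to None.
    return MENUS.get(" ".join(words[1:]))
```

In this sketch, `resolve_menu("Adele, show map")` selects the hypothetical `map` menu, while speech that does not start with the wake word is ignored, so ordinary conversation with Mission Control would not trigger the interface.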

Solutions: Work Flow

For further information on ARSIS 1.0, please watch the video below.